
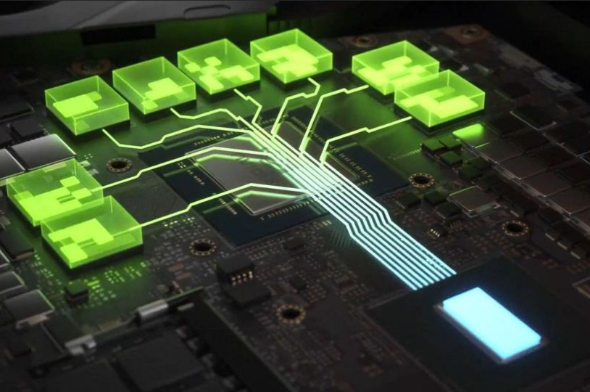

When NVIDIA rethinks texture compression

A new compression technique that takes up less video memory while delivering more detail.

While NVIDIA continues to improve its graphics hardware with a new generation of GPUs every few years, it also improves the software ecosystem around its products. For example, almost all gamers are now familiar with Deep Learning Super Sampling, or DLSS, a technique that reduces the load on the graphics card by having it render a scene at a lower resolution than the one actually displayed. Artificial intelligence then upscales the image to the target resolution, with a result that stays close to native rendering.

Last week, a long technical article published on NVIDIA's research site presented a technique that is still in development but already very promising. The idea is to revisit texture compression. Current compression is already very effective, but it still takes up too much video memory while reducing the level of detail compared with the original texture. A team led by Karthik Vaidyanathan has therefore presented its first work on what it calls "neural compression". Mr. Vaidyanathan explains that his team's goal is to significantly increase the level of detail of textures while also reducing the space they occupy in video memory. What may seem like an insurmountable task is actually well under way: NVIDIA is already reporting textures with four times the resolution and a 30% smaller footprint in video memory.

Presented this way, it seems almost magical, but Karthik Vaidyanathan and his team will give a more detailed presentation of this "neural compression" on August 6. For now, he specifies that the objective was to go significantly further than the techniques currently used (AVIF and JPEG XL). The engineers wanted to preserve a resolution of 4,096 × 4,096 pixels, the same as the native texture, whereas previous techniques reduced it to 1,024 × 1,024 pixels, with an enormous loss of detail as a result. Without going into the technical details, Karthik Vaidyanathan simply points out that, unlike the most commonly used block compression algorithms, no custom hardware is needed: NVIDIA's neural compression relies on matrix multiplication, which is accelerated by all modern GPUs. A very promising solution to avoid further increasing the amount of video memory in future generations of graphics cards.
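
To put the figures quoted above in perspective, here is a rough back-of-the-envelope calculation in Python. It is only a sketch: it assumes that the 30% memory reduction applies to the same comparison as the 4,096 versus 1,024 resolutions, which the article does not state explicitly, and the variable names are purely illustrative.

```python
# Texture side lengths quoted in the article (square textures).
neural_side = 4096     # resolution preserved by neural compression
baseline_side = 1024   # resolution left by the previous techniques

neural_texels = neural_side * neural_side        # 16,777,216 texels
baseline_texels = baseline_side * baseline_side  # 1,048,576 texels

# "Four times the resolution" per axis means 16 times as many texels.
per_axis_ratio = neural_side / baseline_side
texel_ratio = neural_texels / baseline_texels

# Assumption (not confirmed by the article): the neural texture needs
# 70% of the baseline's video memory in this same comparison.
relative_memory = 0.70

# Memory spent per texel, relative to the baseline technique.
relative_memory_per_texel = relative_memory / texel_ratio
density_gain = 1 / relative_memory_per_texel

print(f"Per-axis resolution ratio: {per_axis_ratio:.0f}x")
print(f"Texel count ratio:         {texel_ratio:.0f}x")
print(f"Relative memory per texel: {relative_memory_per_texel:.4f}")
print(f"Texels per unit of memory: about {density_gain:.1f}x more")
```

Under that assumption, each unit of video memory would hold roughly 23 times as many texels as before, which gives an idea of why the result sounds almost magical.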