
GTC 2022: Hopper H100 GPU and Grace CPU Superchip, two heavyweights from NVIDIA

While the general public eagerly awaits the arrival of a new generation of graphics cards, NVIDIA is also targeting the professional market with products that are powerful, to say the least.

The GPU Technology Conference (GTC) is organized by NVIDIA in California. For the 2022 edition, the American company pulled out all the stops to present two major additions to its catalog of data center chips. Although NVIDIA is best known for its GeForce graphics cards, it has for several years now held a significant place among server manufacturers, and it intends to increasingly overshadow Intel (Xeon CPUs) and AMD (EPYC CPUs).

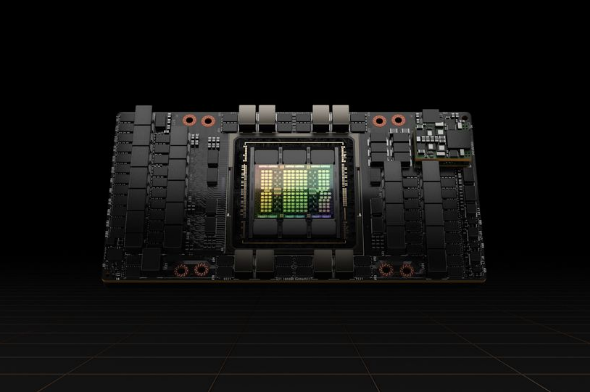

To that end, NVIDIA first lifted the veil on the Hopper generation of chips. It went straight to the point by presenting the H100, which should spearhead its new range and replace the aging A100 of the Ampere range (it is "already" two years old). The subject of various rumors for several months, the H100 is a simply monstrous GPU, even if its transistor count is not as high as expected. While many rumors suggested a mind-boggling 140 billion transistors, we will have to settle for 80 billion. That is still a clear leap forward compared to the A100 and its 54.2 billion transistors.

Moreover, NVIDIA has partnered with TSMC to produce this GPU and, contrary to expectations, it will not use the Taiwanese foundry's N5 process but its current flagship, N4, for a finer node. NVIDIA also specifies that its GPU will be paired with HBM3 memory, and no small amount of it: 80 GB. The most powerful version of the H100, the H100 SXM, is rated at 30 teraFLOPS of FP64 computing power, while its little brother, the H100 PCIe, is limited to 24 teraFLOPS. Finally, as if that were not enough, NVIDIA mentioned the HGX H100 system, which integrates no fewer than eight H100 GPUs communicating with each other via fourth-generation NVLink.

Another presentation, another way to impress its audience: NVIDIA wanted to confirm that the failure of its acquisition of ARM has not dampened its ambitions. The American company has therefore lifted the veil on what it calls the Grace CPU Superchip, a processor designed entirely around the ARM architecture and intended above all for data centers. NVIDIA says its new chip will "deliver the highest level of performance, twice the memory bandwidth and twice the energy efficiency of today's best server chips".

The Grace CPU Superchip is actually two Grace CPUs assembled and connected via NVLink-C2C. In total, the beast integrates 144 ARM Neoverse cores for remarkable computing power. It is backed by LPDDR5x memory with ECC error correction and an impressive 1 TB/s of bandwidth. To show off its muscle, NVIDIA compares the performance of its Grace CPU Superchip to that of a DGX A100 system, which is based on 64-core AMD EPYC 7742 (Rome) chips. On SPECrate 2017, the NVIDIA component would be 1.5x faster. No less. Of course, performance figures from this kind of presentation should be taken with a grain of salt.

NVIDIA is clearly thinking ahead, however, as the Grace CPU Superchip is still under development: the chip is not expected to be released before the beginning of 2023. That leaves the competition some time, since AMD is expected to release its new generation of EPYC CPUs, Genoa. A fine battle in prospect.