
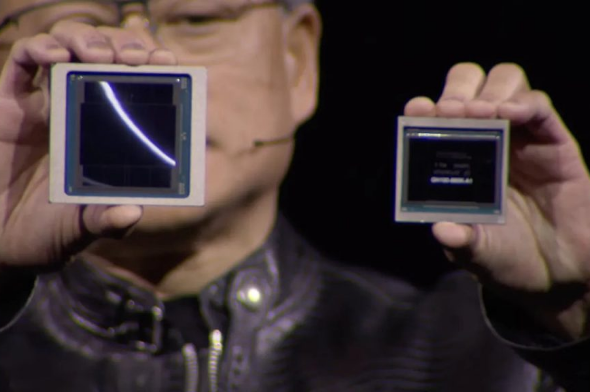

Blackwell B200: NVIDIA prepares a monster processor designed for artificial intelligence

NVIDIA will soon be releasing the most powerful chip in its history to support the AI revolution.

The artificial intelligence revolution is underway, and NVIDIA is undoubtedly one of its main architects. Artificial intelligence requires components capable of processing vast quantities of data and offering enormous computing power. These are the characteristics that perfectly define the Hopper generation processors brought to market by NVIDIA. However, the U.S. company has to contend with the rise of ever more powerful competitors and, in order not to lose its leadership, intends to offer a new generation of processors in the very near future.

NVIDIA CEO Jensen Huang unveiled that generation at GTC 2024, the company's own technology conference. In the coming months, the Blackwell generation of chips should give NVIDIA a worthy successor to Hopper. Chips named B100, B200 and GB200 were presented, with the B200 described as nothing less than "the largest processor allowed by current technologies". The GB200 is a special case: it combines two B200s with an NVIDIA Grace processor for optimum performance.

In itself, the B200 is already a monster of technology and of power. The beast is built around two dies of 104 billion transistors each, for a total of 208 billion transistors, more than double the count of the Hopper-generation H100. Such a feat is made possible by TSMC's 4NP process, a 4 nm-class node that allows more transistors to be integrated while improving energy efficiency. NVIDIA prefers to emphasize the raw performance of its new baby, citing "2.5x the performance of the previous generation".
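The transistor figures above are easy to check with a few lines of arithmetic. The sketch below uses the article's numbers for the B200; the 80-billion-transistor count for the H100 is NVIDIA's published figure and is not quoted in the article, so treat it as an assumption here.

```python
# Transistor counts, in billions.
B200_DIE_TRANSISTORS = 104  # per die, from the article
B200_DIE_COUNT = 2          # two dies per B200, from the article
H100_TRANSISTORS = 80       # assumption: NVIDIA's published H100 figure, not from the article

# Total for the full B200 package and its ratio to the H100.
b200_total = B200_DIE_TRANSISTORS * B200_DIE_COUNT
ratio_vs_h100 = b200_total / H100_TRANSISTORS

print(f"B200 total: {b200_total} billion transistors")
print(f"B200 / H100: {ratio_vs_h100:.1f}x")
```

Under that assumption, the ratio comes out above 2, which is consistent with the "more than double" claim.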

Jensen Huang also took the time to point out that the B200 features a 10 TB/s interconnect, so that the two 104-billion-transistor dies can communicate under the best possible conditions. The chip is also said to pair a 4096-bit memory bus with 192 GB of HBM3E memory, for a bandwidth of 8 TB/s. This is "the engine of a new industrial revolution", according to NVIDIA's CEO.
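As a rough sanity check, the memory figures quoted above can be related to one another: peak bandwidth is the bus width multiplied by the per-pin data rate. The sketch below works backwards from the article's 4096-bit and 8 TB/s figures to the per-pin rate they would imply. The function name is purely illustrative, and decimal units (1 TB = 10^12 bytes) are assumed.

```python
def implied_pin_rate_gbps(bandwidth_tb_s: float, bus_width_bits: int) -> float:
    """Return the per-pin data rate (Gbit/s) implied by a peak bandwidth and bus width.

    Assumes decimal units: 1 TB = 1e12 bytes, 1 Gbit = 1e9 bits.
    """
    total_bits_per_s = bandwidth_tb_s * 1e12 * 8  # bytes/s -> bits/s
    return total_bits_per_s / bus_width_bits / 1e9


# Figures as quoted in the article: 8 TB/s over a 4096-bit bus.
rate = implied_pin_rate_gbps(8, 4096)
print(f"{rate:.3f} Gbit/s per pin")
```

The same function can be called with a different bus width to see how the implied per-pin rate changes for a given bandwidth.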